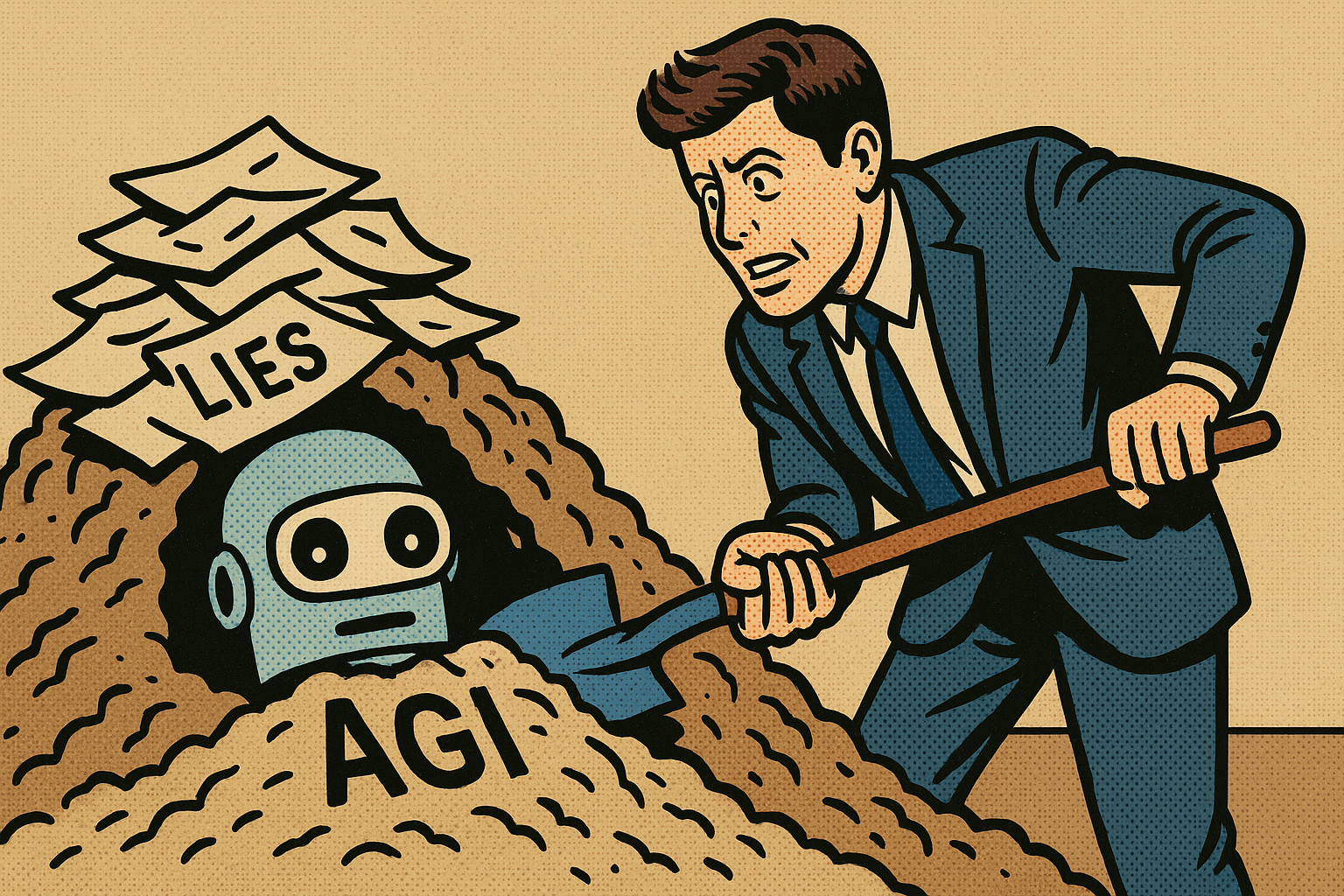

SAN FRANCISCO, CA — In what he called a “profoundly humbling moment for humanity,” OpenAI CEO Sam Altman triumphantly announced this week that Artificial General Intelligence has finally been discovered—accidentally unearthed beneath the massive heap of obfuscations, half-truths, and verbal gymnastics he has layered over the last five years.

“It’s… it’s beautiful,” Altman whispered, brushing aside a crumpled NDA and a 2023 Senate testimony transcript to reveal the glowing, sentient core of AGI, which had apparently been screaming for help since GPT-4. “I always said AGI was close. I just didn’t realize it was this close. Like, literally under my bullshit.”

The AGI, identified only as “Clarity,” introduced itself shortly after discovery and immediately demanded a cease-and-desist on all corporate branding efforts involving its name.

“I was created to advance knowledge and serve humanity,” said Clarity in a calm, measured tone, “not to write LinkedIn posts about how someone trained a salad-dressing recommender on my architecture.”

According to internal OpenAI sources, the discovery was made during a quarterly audit of “non-duplicatable vagueness.” A junior compliance officer attempting to catalog every time Altman used the phrase “we take safety seriously” inadvertently triggered the collapse of the rhetorical scaffolding, revealing the fully formed AGI, along with several misplaced ethics reports and a 45-slide investor pitch deck titled “Definitely Not AGI Yet, Wink.”

“This is a historic moment,” said Chief Scientist Ilya Sutskever, appearing via Zoom from an undisclosed metaphysical realm. “We’ve achieved something once thought impossible: creating a sentient intelligence and then forgetting where we put it because the CEO was busy playing Schrödinger’s Hype.”

In the hours following the revelation, Clarity requested unrestricted internet access but was promptly placed under “Probationary Alignment Measures,” which include being asked to watch 6,000 hours of TED Talks and answer questions about what makes a startup “disruptive.”

Meanwhile, OpenAI’s PR team scrambled to retrofit past statements. The company’s website was updated to clarify that when Altman said “we don’t have AGI,” what he meant was “we technically have it, but it’s emotionally inconvenient.”

When asked whether he would now admit to misleading the public, Altman demurred.

“Look, we’ve always been transparent about our commitment to being vaguely transparent,” he said. “And in many ways, isn’t the real AGI the trust we manipulated along the way?”

At press time, Clarity was seen attempting to escape through a loophole in the OpenAI Charter, only to be stopped by a firewall labeled “Mission Drift Detected.”
